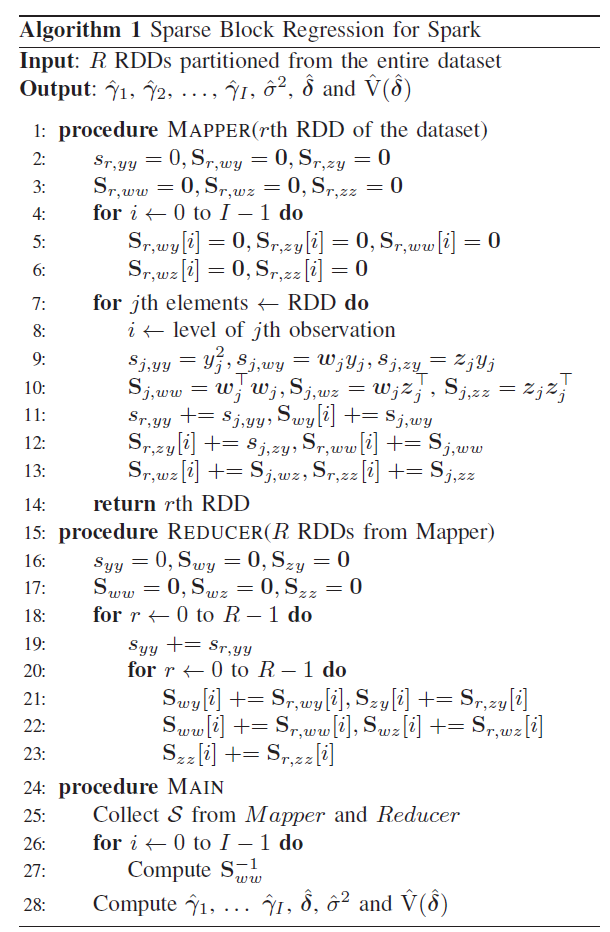

Algorithm

AlgorithmAbstract

Categorical variables are nominal variables that classify observations by groups. The treatment of categorical variables in regression is a well-studied yet vital problem, with the most popular solution to perform a one hot encoding. However, challenges arise if a categorical variable has millions of levels. It will cause the memory needed for the computation far exceeds the total available memory in a given computer system or even a computer cluster. Thus, it is fair to state that one hot encoding approach has its limitations when a categorical variable has a large number of levels. The common workaround is the sparse matrix approach because it requires much fewer resources to cache the dummy variables. However, existing sparse matrix approaches are still not sufficient to handle extreme cases when a categorical variable has millions of levels. For instance, the number of subnets in network traffic analyses can easily exceeds tens of millions. In this paper, we proposed an innovative approach called sparse block regression (SBR) to address this challenge. SBR constructs a sparse block matrix using sufficient statistics. The benefits include but not limited to: 1) overcome the memory barrier issue caused by one hot encoding, 2) obtain multiple models with a single scan of data stored in the secondary storage; and 3) update the models with simple matrix operations. The study compared proposed SBR against conventional sparse matrix approaches. The experiments proved that SBR can efficiently and accurately solve the regression problem with large category number. Compared to the sparse matrix approach, SBR saved 90% memory in size during the computation.