Baijian Yang

Associate Dean for Research and Professor of Computer and Information Technology

Purdue University

Biography

Dr. Baijian “Justin” Yang is the Associate Dean for Research at Purdue Polytechnic Institute and a Professor in the Department of Computer and Information Technology at Purdue University. He earned his Ph.D. in Computer Science from Michigan State University and holds both an M.S. and a B.S. in Automation (EECS) from Tsinghua University. With broad expertise in computer and information technology, Dr. Yang has authored over 100 peer-reviewed publications. His recent research focuses on applied machine learning, big data, and cybersecurity. He has also contributed to the field through leadership roles, serving as a board member for the Association of Technology, Management, and Applied Engineering (ATMAE) from 2014 to 2016 and as a member of the IEEE Cybersecurity Initiative Steering Committee from 2015 to 2017. Beyond academia, Dr. Yang holds several industry certifications, including CISSP, MCSE, and Six Sigma Black Belt. He has also authored two books on Windows Phone programming.

- Applied Machine Learning

- Big Data

- Cybersecurity

PhD in Computer Science, 2002

Michigan State University

MS in Automation (EECS), 1998

Tsinghua University

BS in Automation (EECS), 1995

Tsinghua University

Experience

Courses Taught

Projects

Featured Publications

Recent advances in high-throughput molecular imaging have pushed spatial transcriptomics technologies to subcellular resolution, which surpasses the limitations of both single-cell RNA-seq and array-based spatial profiling. The multichannel immunohistochemistry images in such data provide rich information on the cell types, functions, and morphologies of cellular compartments. In this work, we developed a method, single-cell spatial elucidation through image-augmented Graph transformer (SiGra), to leverage such imaging information for revealing spatial domains and enhancing substantially sparse and noisy transcriptomics data. SiGra applies hybrid graph transformers over a single-cell spatial graph. SiGra outperforms state-of-the-art methods on both single-cell and spot-level spatial transcriptomics data from complex tissues. The inclusion of immunohistochemistry images improves the model performance by 37% (95% CI: 27–50%). SiGra improves the characterization of intratumor heterogeneity and intercellular communication and recovers the known microscopic anatomy. Overall, SiGra effectively integrates different spatial modality data to gain deep insights into spatial cellular ecosystems.

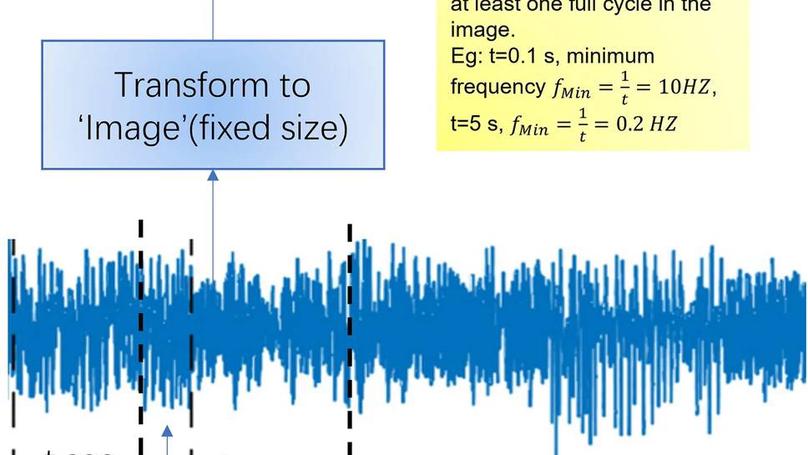

As many as 80% of critically ill patients develop delirium, which increases the need for institutionalization and leads to higher morbidity and mortality. Clinicians detect less than 40% of delirium when using a validated screening tool. EEG is the criterion standard but is resource intensive and thus not feasible for widespread delirium monitoring. This study evaluated the use of limited-lead rapid-response EEG and supervised deep learning methods with a vision transformer to predict delirium. This proof-of-concept study used a prospective design to evaluate the use of supervised deep learning with a vision transformer and a rapid-response EEG device for predicting delirium in mechanically ventilated, critically ill older adults. Fifteen different models were analyzed. Using all available data, the vision transformer models provided 99.9%+ training and 97% testing accuracy across models. A vision transformer with rapid-response EEG is capable of predicting delirium, and such monitoring is feasible in critically ill older adults. This method therefore has strong potential for improving the accuracy of delirium detection, providing greater opportunity for individualized interventions. Such an approach may shorten hospital length of stay, increase discharge to home, decrease mortality, and reduce the financial burden associated with delirium.

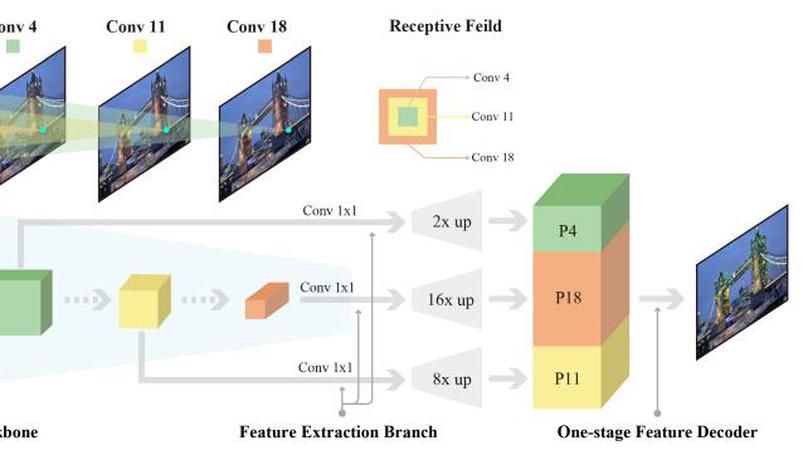

In this work, we introduce a Denser Feature Network (DenserNet) for visual localization. Our work provides three principal contributions. First, we develop a convolutional neural network (CNN) architecture that aggregates feature maps at different semantic levels for image representations. Using denser feature maps, our method can produce more keypoint features and increase image retrieval accuracy. Second, our model is trained end-to-end without pixel-level annotation other than positive and negative GPS-tagged image pairs. We use a weakly supervised triplet ranking loss to learn discriminative features and encourage keypoint feature repeatability for image representation. Finally, our method is computationally efficient because our architecture shares features and parameters during computation. Our method can perform accurate large-scale localization under challenging conditions while remaining within computational constraints. Extensive experimental results indicate that our method sets a new state of the art on four challenging large-scale localization benchmarks and three image retrieval benchmarks.

Recent Publications

Contact

- byang AT purdue.edu

- 1-765-496-7143

- 401 N. Grant Street, West Lafayette, IN 47907

- Tuesdays 10:30 to 12:30

- Thursdays 10:30 to 11:00, then 12:30 to 14:00